Feel Like a Number: Perceived Fairness of the Job Application Process through AI

by Nirvi Maru

Thesis supervisor: dr. Theo Araujo

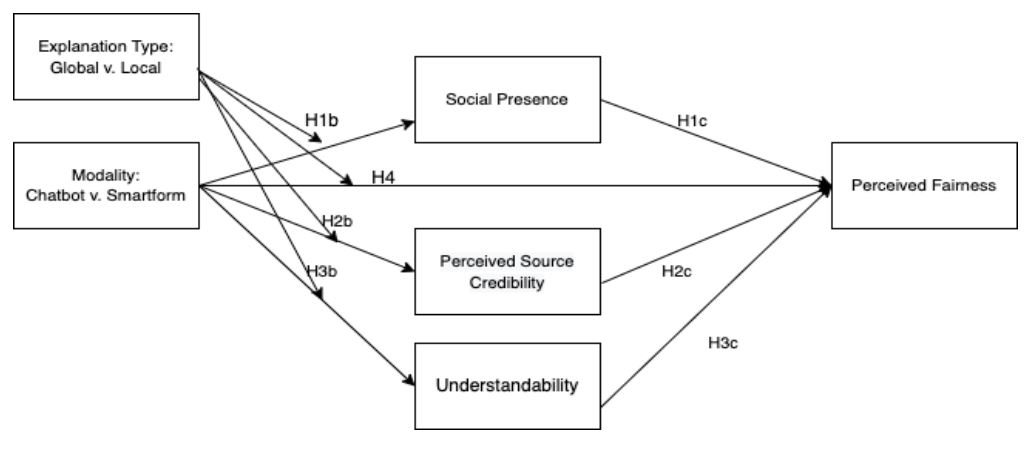

Artificial Intelligence (AI) in the form of automated decision systems (ADS) is increasingly being used in the recruitment field for personnel selection. Discussions of the pros and cons of relying on decisions made exclusively by algorithms are profuse (Araujo et al., 2018; Helberger, Araujo & de Vreese, 2020). A dominant theme recurring in these discussions is the concept of ‘fairness’ in automated decision-making (ADM) (Araujo et al., 2020; Shin, 2020), especially amongst applicants, as one of the critical indicators for the acceptance of this evolution (Kim & Phillips, 2021; Helberger, Araujo & de Vreese, 2020; Van den Bos et al., 2003). This study explored the impact of two factors on an applicant’s perceived fairness of the process: the affordances of the medium of the AI-based algorithm (referred to as modalities), chatbot vs. smartform, and the type of explanation provided for the algorithm’s decision output (explainable AI, XAI), global vs. local.

Methods

The study implemented an experiment to observe the effects of modality and explanation on perceived fairness of job applicants, through social presence, perceived source credibility, understandability and digital literacy as mediators. The experiment was conducted using an online survey.

All participants (N = 272) were first shown a job advertisement for a vacant position, to which they were asked to imagine applying. They were then randomly assigned to one of four conditions, in which they interacted with either a chatbot or a smartform as part of the application process and subsequently received either a global or a local explanation for the decision outcome provided by the algorithm.
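The 2 × 2 between-subjects assignment described above can be sketched as follows. This is a minimal illustration, not the study's actual procedure: the function name `assign_conditions`, the seed, and the balanced allocation (equal cell sizes) are assumptions, since the abstract states only that assignment was random.

```python
import random
from collections import Counter
from itertools import product

# The two manipulated factors named in the abstract.
MODALITIES = ("chatbot", "smartform")
EXPLANATIONS = ("global", "local")
CONDITIONS = list(product(MODALITIES, EXPLANATIONS))  # four cells


def assign_conditions(n_participants, seed=None):
    """Return one (modality, explanation) cell per participant.

    Assumption: cells are filled as evenly as possible and then shuffled.
    The abstract does not say whether allocation was balanced.
    """
    rng = random.Random(seed)
    repeats = -(-n_participants // len(CONDITIONS))  # ceiling division
    pool = (CONDITIONS * repeats)[:n_participants]
    rng.shuffle(pool)
    return pool


assignments = assign_conditions(272, seed=1)
print(Counter(assignments))
```

With 272 participants and four cells, this balanced variant places 68 participants in each condition; simple (unbalanced) randomization would instead draw each participant's cell independently.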

The smartform conditions comprised a form programmed in Qualtrics that displayed questions to participants based on their response to the previous question. The chatbot conditions consisted of a chatbot programmed using Google Dialogflow and the Conversational Agent Research Toolkit (CART). Explanation types were programmed in Qualtrics for the smartform and in CART for the chatbot. Interaction with either modality functioned as the interview for the job. The questions posed and their order were the same across both modalities. What varied was the explanation applicants received, at the end of their interaction, for the decision made by the algorithm. Local explanations explained a single case, here the applicant’s own, e.g., “Alex is not selected for the job due to suboptimal performance in decision factor: communication skill rating”. Global explanations explained how the model worked, including the factors critical to the decision outcome, e.g., “JobMax considers an applicant’s qualifications with numerous other data points. Prior experience, contract type, communication skill rating, and situational judgement are the most important factors considered in this model. Poor performance in two or more of these factors results in a rejection”. Afterwards, participants’ perceptions of social presence, source credibility, understandability and fairness were measured via survey.
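The difference between the two explanation types can be made concrete with a short sketch. It is purely illustrative: the names `KEY_FACTORS`, `is_rejected`, `global_explanation` and `local_explanation` are hypothetical, the rule encoded is only the one quoted in the global explanation text, and nothing here reproduces the Qualtrics or CART implementation used in the study.

```python
# Factors and threshold as quoted in the global explanation text.
KEY_FACTORS = (
    "prior experience",
    "contract type",
    "communication skill rating",
    "situational judgement",
)
REJECTION_THRESHOLD = 2  # "two or more of these factors"


def is_rejected(poor_factors):
    """Apply the quoted rule: reject when at least two key factors are poor."""
    relevant = set(poor_factors) & set(KEY_FACTORS)
    return len(relevant) >= REJECTION_THRESHOLD


def global_explanation():
    """Describe how the model works as a whole, independent of any applicant."""
    factors = ", ".join(KEY_FACTORS[:-1]) + ", and " + KEY_FACTORS[-1]
    return (
        "JobMax considers an applicant's qualifications with numerous other "
        f"data points. {factors.capitalize()} are the most important factors "
        "considered in this model. Poor performance in two or more of these "
        "factors results in a rejection."
    )


def local_explanation(name, factor):
    """Describe a single case: this applicant and the factor cited for them."""
    return (
        f"{name} is not selected for the job due to suboptimal performance "
        f"in decision factor: {factor}."
    )


print(global_explanation())
print(local_explanation("Alex", "communication skill rating"))
```

The global text takes no applicant-specific input, whereas the local text is parameterized by the applicant and the cited factor, which is the contrast the manipulation relies on.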

Results

Contrary to expectations, the direct effect of the chatbot modality on perceived fairness was negative. This finding is notable because interaction with a chatbot is considered more akin to how humans naturally interact than interaction with a smartform (Zerilli et al., 2019).

The interaction effect of modality and explanation type on social presence was positive and significant. Social presence was a significant mediator between modality and perceived fairness. This indicates that the perception of interacting with another being (Short et al., 1976) is an important factor in this paradigm.

Surprisingly, local explanations had a positive and significant effect even when delivered through the smartform, which could mean that applicants prefer feedback and information focused on themselves rather than on the process as a whole, in any modality. This is informative for the explainability literature, which generally holds that global explanations of algorithmic output are better in terms of transparency and completeness (Liem et al., 2018). In this paradigm, local explanations appear to be more comprehensible and a better fit for the purpose.

This study elucidates a mechanism by which participant perceptions can be affected in a high-stakes context such as job recruitment. With AI becoming increasingly pervasive in our lives, and many decision-making processes either fully or partially carried out by algorithms, increasing awareness of their influence is crucial. This study aims to add to the growing field of Human-Machine Communication (HMC) research (Guzman & Lewis, 2019), serving as an exemplification of human behavior (CASA model, Reeves & Nass, 1996) intersected with communication features of algorithmic output (Explainable AI, XAI, FATE; Shin, 2021) and concepts of technological affordance (MAIN model; Sundar, 2008).

References

Araujo, T., De Vreese, C., Helberger, N., Kruikemeier, S., van Weert, J., Bol, N., … & Taylor, L. (2018). Automated decision-making fairness in an AI-driven world: Public perceptions, hopes and concerns. Digital Communication Methods Lab.

Araujo, T. (2020). Conversational Agent Research Toolkit: An alternative for creating and managing chatbots for experimental research. Computational Communication Research, 2(1), 35-51.

Araujo, T., Helberger, N., Kruikemeier, S., & De Vreese, C. H. (2020). In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI & SOCIETY, 35(3), 611-623.

Helberger, N., Araujo, T., & de Vreese, C. H. (2020). Who is the fairest of them all? Public attitudes and expectations regarding automated decision-making. Computer Law & Security Review, 39, 105456.

Kim, B., & Phillips, E. (2021). Humans’ Assessment of Robots as Moral Regulators: Importance of Perceived Fairness and Legitimacy. arXiv preprint arXiv:2110.04729.

Liem, C. C., Langer, M., Demetriou, A., Hiemstra, A. M., Wicaksana, A. S., Born, M. P., & König, C. J. (2018). Psychology meets machine learning: Interdisciplinary perspectives on algorithmic job candidate screening. In Explainable and interpretable models in computer vision and machine learning (pp. 197-253). Springer, Cham.

Lewis, S. C., Guzman, A. L., & Schmidt, T. R. (2019). Automation, journalism, and human–machine communication: Rethinking roles and relationships of humans and machines in news. Digital Journalism, 7(4), 409-427.

Reeves, B., & Nass, C. (1996). The media equation: How people treat computers, television, and new media like real people and places. Cambridge University Press.

Shin, D. (2020). How do users interact with algorithm recommender systems? The interaction of users, algorithms, and performance. Computers in Human Behavior, 109, 106344.

Shin, D. (2021). The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. International Journal of Human-Computer Studies, 146, 102551.

Short, J., Williams, E., & Christie, B. (1976). The social psychology of telecommunications. John Wiley & Sons.

Sundar, S. S. (2008). The MAIN model: A heuristic approach to understanding technology effects on credibility (pp. 73-100). MacArthur Foundation Digital Media and Learning Initiative.

Van den Bos, K., Maas, M., Waldring, I. E., & Semin, G. R. (2003). Toward understanding the psychology of reactions to perceived fairness: The role of affect intensity. Social Justice Research, 16(2), 151-168.

Zerilli, J., Knott, A., Maclaurin, J., & Gavaghan, C. (2019). Transparency in algorithmic and human decision-making: Is there a double standard? Philosophy & Technology, 32(4), 661-683.